Can technology bridge the Mental Healthcare Gap?

By Arunima Rajan

Mobile applications are the next big frontier. Can mental health apps replace human-led therapy and interventions?

Anupama, in her twenties, lives in Kottayam, a small town in Kerala. She was diagnosed with bipolar disorder a couple of years ago and has since relied on medication, the only way known to most medical practitioners to manage the symptoms of the condition.

It was only recently, several years after her condition was diagnosed and she was given a treatment plan, that she was able to sign up for an app-based mental-health service. She was very keen to do this.

Anupama chose a service that enables her to manage her triggers without compromising her personal information.

Ever since she signed on to the service, she has noticed that while she still has episodes, she feels more in charge and aware of her emotions. She is quick to figure out when something isn’t right because her application notifies her about trends in her sociability and mood patterns. Before things escalate, she gets a message that lists options for consultation with available mental health therapists, and is able to get help immediately. Today, Anupama is in her late 30s.

The pills are part of her treatment plan, of course, but the ability to keep track of symptoms, and recognise trends or prompts, has made her feel more empowered.

Anupama isn't alone. The World Health Organisation (WHO) has some alarming statistics that underscore the need for scalable mental health solutions. Globally, 5% of adults suffer from depression, which is a leading cause of disability worldwide and a major contributor to the global burden of disease. One in 13 people globally suffers from anxiety, and anxiety disorders are the most common mental disorders worldwide.

John Torous, Director of Digital Psychiatry, Beth Israel Deaconess Medical Centre

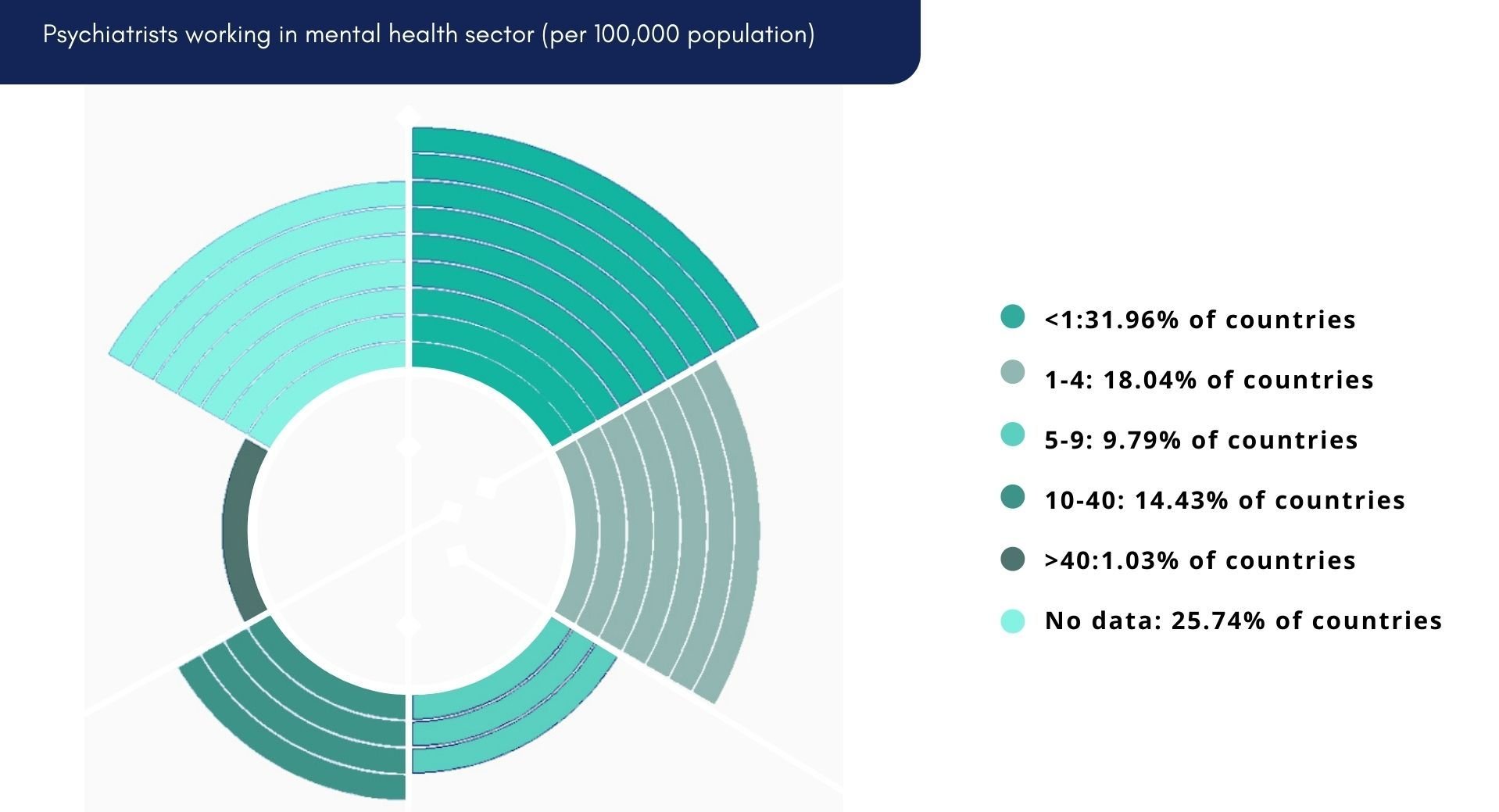

“Globally, there are simply not enough mental health clinicians. To reach everyone, we need technology to help,” says John Torous, director of digital psychiatry at Beth Israel Deaconess Medical Centre, a teaching hospital of Harvard Medical School.

Unmet needs

A senior research fellow at the George Institute India, Oommen John believes there is a yawning gap in mental health service-related delivery in the country. Innovations, particularly digital health platforms, he says, have a critical role to play. “We need to be cognisant that digital health tools are only an enabler to connect service providers to those utilising the services.” To truly address the market, service delivery capacity needs to be increased by ensuring trained and competent mental health professionals are always on call, he adds.

In certain mental health conditions, emerging technologies play a very critical role, feels Oommen John. These include voice signature-based detection of moods, pattern recognition of changes in activities of daily living, and affective computing, which uses facial expressions to detect and monitor emotional health. While these technologies are promising and hold long-term value, he believes validating them in real-life contexts for India should be the immediate focus.

John notes that there are already several digital-healthcare platforms offering mental-health screening and psychological support through virtual care delivery models. “And during the Covid-19 pandemic many of these scaled quite substantially,” he adds.

RANGE OF TECHNOLOGY IN MENTAL HEALTHCARE SPECTRUM

Shun the Stigma

Pramod Kutty, CEO and Co-founder, Connect2MyDoctor

The issue with mental health is closer than we think and can affect anyone anywhere, says Pramod Kutty, CEO and Co-founder, Connect2MyDoctor, adding, “We have seen many instances where patients who live hardly 5-10 km away use technology to consult psychiatrists and psychologists online.” Owing to the pervasive nature of mental illnesses, the target audience for mental health apps can be pretty much anyone who needs help, he says. However, despite the web’s reach, internet infrastructure and technology literacy are two big areas that need to be addressed in the mental health technology model, he adds.

Given the level of stigma around mental health conditions, technology platforms have an immense role in behavioural interventions, observes Oommen John. “A person who is depressed is unlikely to be seeking out care, but they are likely to be looking for coping strategies or seeking information about the condition; these behaviours could be easily used as markers to ‘discover’ the silently suffering populations and support them by providing appropriate linkages, by way of help and interventions.”

To this end, the Telemanas project under the Telemental Health Initiative of the Ministry of Health and Family Welfare, led by the National Institute of Mental Health and Neurosciences (NIMHANS), is a promising opportunity, offers Oommen John. “The George Institute is in talks with NIMHANS and we are working towards a nationally scalable platform,” he adds.

In India, language plays a critical role in access to services, observes John, so any digital mental health platform needs to consider how to overcome language barriers. Fortunately, a number of start-ups now offer vernacular interfaces that allow natural language processing. “Interactive chat-bots might also play a crucial role in behavioural interventions to nudge and provide on-going real time support to those needing behavioural interventions. However, the human-to-human connection is also very crucial in the case of a sensitive topic, such as mental health,” adds John.

Tech-based care can help reduce the treatment gap for those who live in regions where there are very few mental health services, provided they have access to the requisite digital infrastructure, says Ankita Lalwani, a research associate at the Centre for Mental Health Law & Policy (CMHLP). Technology can be leveraged to connect people through various forms of peer support or community-based resources, with the benefits of anonymity, convenience, and lower costs. Technology can also significantly aid in research, to help better understand the predictors of suicide, and other mental health problems, and develop effective interventions accordingly. “Interventions using technology such as EMAs (Ecological Momentary Assessments), ML (Machine Learning), and AI (Artificial Intelligence) can help in identifying more complex causal pathways of mental health problems and suicidal behaviors. This research can be vital for designing more targeted interventions. However, there are massive ethical implications for this kind of use of technology and we need to deliberate a lot more on what the collateral costs and implications are,” she adds.

Public Health Failure

India, with a population of 1.4 billion, has about 7,000-8,000 psychiatrists, 8,000-10,000 psychologists and 3,000-4,000 other mental health professionals. “About 75 per cent of these are in the metros and big cities while the rest of the country is largely underserved. These areas, however, have access to smartphones and the internet, so technology, through education, awareness and provision of mental health services can reach them,” says Dr Milan H Balakrishnan, consultant psychiatrist and counsellor at the Bombay Hospital and Research Centre.

So, technology can reach the population that doesn’t have access to good mental healthcare.

He further elaborates: “The problems with the current model are that most mental health apps are built by software professionals who don’t understand mental health. They use apps to glean data or quickly monetise, which doesn’t happen easily in the case of services, such as mental health, leading to the apps soon being dumped and becoming redundant. Also, they do not focus enough on patient education.”

Shekhar Saxena, Professor, Practice of Global Mental Health, Global Health and Population, Harvard TH Chan School of Public Health

Investment, especially public-funded, in mental health is low across the world, but especially so in low- and middle-income countries, including India, says Shekhar Saxena, the Professor of the Practice of Global Mental Health, Global Health and Population, Harvard TH Chan School of Public Health. “India has enough expertise and funds to improve mental-health outcomes - these just need to be utilised better. India needs to spend at least 5 per cent of its total health budget on mental health – at present, it spends less than 1 per cent,” he explains. This observation is radical in its assessment of the need gap, contrary to the common opinion that India faces an acute shortage of trained manpower in the mental healthcare space.

The central and state governments’ budgeted expenditure on the health sector reached 2.1 per cent of GDP in 2021-22, against 1.3 per cent in the previous fiscal, according to the Economic Survey 2021-22.

Arjun Kapoor, Programme Manager and Research fellow, Centre for Mental Health Law & Policy (CMHLP), Indian Law Society

Arjun Kapoor, programme manager and research fellow at the Centre for Mental Health Law & Policy (CMHLP), Indian Law Society, Pune, further adds that there are many barriers to providing effective mental healthcare in India. “For one, there is a complete lack of political will to prioritise mental health as a governance issue through evidence-based policy making. 93 per cent of the funds allocated under the National Mental Health Programme in the year 2019-2020 remained unutilized.”

Mental health is still understood through a biological and medicalised approach which focuses primarily on medication and curative services rather than community-based models of care and prevention, he explains.

Amit Malik, Founder and CEO, InnerHour

Amit Malik, founder and CEO of mental health start-up InnerHour, notes that during his years in clinical psychiatry practice and in managing large-scale health services, one of the biggest challenges he saw was the delay in seeking professional help. “When I started looking at numbers in India more broadly a few years ago, I found almost an 80-90 per cent mental health service gap in India, that is 90 per cent of those who need mental health services don’t get access to the help they need because of lack of awareness, self and social stigma, cost, accessibility, and quality.”

“The motivation for starting InnerHour was to address these challenges,” he adds. InnerHour offers self-help tools, therapy sessions with psychologists, psychiatric consultations, supervised peer-support communities, and an employee wellness program.

InnerHour provides psychological therapy and counselling in 8+ Indian languages. “Additionally, we work with a large number of partners, including employers, educational institutions and insurance companies,” says Malik. App subscriptions and therapy sessions are also priced competitively.

“To evaluate efficacy, the app collects a range of data across offerings - including outcome scores, regular assessments and reassessments, user feedback, in-app feedback, user interviews and session feedback,” Malik adds.

End of human-led therapy?

Internet infrastructure can solve the problem of accessibility and help in establishing a connection with the patient. “While we believe healthcare is best provided in person, technology can be used in combination with human-led therapy,” says Connect2MyDoctor’s Kutty.

“Mobile apps can help with mild to moderate levels of emotional distress. However, the therapeutic alliance developed between a therapist and client during the session, and the human element of a therapeutic relationship is not fully achieved through apps,” says Dr Rituparna Ghosh, Consultant Psychologist, Apollo Hospitals, Navi Mumbai. “Regular feedback, post therapy sessions, to monitor the client’s progress are mandatory in any therapy practice. If only apps are used without human support, they might help in dealing with the concerns, but significant improvement might be challenging, especially for severe psychological concerns,” adds Ghosh.

Source: https://www.weforum.org/whitepapers/empowering-8-billion-minds-enabling-better-mental-health-for-all-via-the-ethical-adoption-of-technologies

Therapy is a nuanced, adaptable, one-on-one engagement that helps to find solutions to various problems and isn’t a one-size-fits-all scenario. It can’t be entirely replaced by Artificial Intelligence. For starters, there are not yet enough large-sample studies or reliable data to establish the effectiveness of these apps. Apps can add value to those aspects of therapy that are more time intensive, such as patient education, relaxation exercises and mindfulness practices, feels the Bombay Hospital and Research Centre’s Dr Balakrishnan, who is also the founder of Mindcares, which works to raise mental health awareness in India. Dr Balakrishnan notes that the use of tech is still in a learning phase, and this learning will help frame policies and regulations in future.

“Our team created mindapps.org to help people evaluate apps and allow them to select what is most important. We cannot replace human-led therapy with an app today. Self-help apps can be useful, but the impact is not large for most people. There is better support for apps used as part of care. This suggests that having human support is important for more effective care,” Harvard Medical School’s Torous adds. “Digitally-delivered mental-health support can help, but only as an adjunct and not by itself. Apps are not a replacement for investment in mental health,” he emphasises. According to him, most mental health apps are non-evidence-based and can actually compromise privacy of user data.

While technology has the potential to improve access to healthcare, including mental healthcare, it is not a complete solution, according to Arjun Kapoor of the Centre for Mental Health Law & Policy (CMHLP), Indian Law Society, Pune. “Technology improves reach and helps disseminate information and resources to underserved communities, but the reach of digital and online interventions is limited to people who have access to digital devices and, in many cases, to the internet as well.” The internet penetration rate in India is around 50 per cent, meaning that half the population in our country does not have access to the internet. There is also a huge gender digital divide in India, most pronounced for women in rural areas and lower-income households, according to Kapoor. It is important to add that women are nearly twice as likely as men to be diagnosed with depression, according to Mayo Clinic, a non-profit academic medical centre focused on integrated health care, education, and research.

Kapoor's colleague Ankita Lalwani, a research associate at CMHLP, seconds his views. “Globally, we’re seeing a proliferation of mental health apps – we know this is a rapidly growing billion-dollar industry which is commoditizing the discourse on mental health. But we really need to think about what we mean by ‘successful’ apps.”

She adds: “What may be considered as ‘successful’ in terms of the market or commercially is not necessarily evidence-based or up to scientific standards of effectiveness leading to better mental health outcomes. Commercial success should not be confused with efficacy or with being evidence-based. In fact, there is scant evidence to prove that mobile apps are actually effective as standalone interventions in preventing or managing mental health problems.”

Ankita Lalwani, Research Associate, CMHLP

Source: https://www.weforum.org/whitepapers/empowering-8-billion-minds-enabling-better-mental-health-for-all-via-the-ethical-adoption-of-technologies

Support Clinicians

Krishna Veer Singh, Co-Founder and CEO, Lissun

Resources such as guided meditations, sleep stories, ambient sounds and educational videos can act as a first line of defence for anyone dealing with a less severe mental health concern, such as stress, anxiety, or a temporary disturbance of emotions. To this effect, Krishna Veer Singh, Co-Founder and CEO of Lissun, feels the time is right for the mental healthcare industry to flourish and take the pressure off the fragile primary healthcare infrastructure.

“However, specialised apps, such as Lissun take it a step further. It not just helps you evaluate the severity of your mental health issue, but can also predict if a person is prone to developing it in future. Our artificial intelligence and machine learning powered tools help do that job. Different solutions are provided (both free and paid) based on a person’s result and situation. End-to-end mental healthcare is provided on Lissun.” Apart from this, Lissun addresses people who are undergoing critical treatments (cancer, dialysis, IVF etc.) and suffer from stress and depression but are unaware of it. In such cases, clinical care in addition to app-based services can provide holistic treatment and the best outcomes, explains Singh.

“Organisations can no longer rely exclusively on human-delivered interventions. They need to use technology to deliver scalable, clinically-backed mental health care. Telemedicine is the tip of the iceberg, but it hasn’t made accessing care any easier. We still have the same number of clinicians we had before, but far more need. Virtual care is a much larger opportunity giving us the ability to reach as many people as possible,” says Michael Evers, CEO of Woebot Health.

Evers admits that while such tools address many of the gaps in mental health care today, they are limited by the delivery modality. “That said, enabling mobile access and delivering mobile solutions to the areas of the world that are currently underserved is critical to economic, social and other aspects of human development, not just mental health. We’re hopeful that we’ll be able to reach even more people as development in underserved communities continues,” adds Evers.

Woebot Health was founded in 2017 by Alison Darcy, PhD, to make mental health totally accessible. The team develops AI-based products and tools that put scalable and clinically validated mental health solutions, based on decades of proven therapeutic approaches, including cognitive behavioural therapy (CBT), into the hands of those who need them most.

Michael Evers, CEO of Woebot Health

Evers elaborates: “Woebot is our first product. It’s an ally for people delivered via an app that provides immediate support to people experiencing symptoms of anxiety or depression. It has shown clinically meaningful symptom reduction in just two weeks and has exchanged millions of messages with 1.5 million users.” It is intended to be the first of many similar products addressing different mental health issues and conditions.

“Our goal is not to replace human therapists. It is to make mental healthcare fundamentally accessible, and we believe AI is the missing link that can help people in their darkest moments. AI doesn’t judge, is always available, and meets you where you are. It can help the most vulnerable. Healthcare, including behavioural health integrated with physical health, is undergoing an end-to-end transformation. The massive restructuring to virtual, person-centred care will require deep and meaningful engagement,” adds Woebot’s Evers.

As mobile-tech begins to play a larger role in healthcare, validating its effectiveness demands high standards of outcome-based validity test results. “Our approach is to validate our technology at the highest levels through clinical validation and regulatory approval. We have a long track record of conducting scientific research, with four RCTs and 10 peer-reviewed publications to date. A lot of our clinical validation also comes from our work with healthcare providers, systems and academics. And we are preparing our first FDA regulated trial this year.” Evidence is critical, Evers adds, but he believes one cannot have urgency and evidence simultaneously. “As an industry, we need to rethink how we can be more effective with evidence, take incremental risks, and perform over time,” adds Evers.

Research by GOQii, a preventive healthcare company, published as the India Fit Reports: Stress and Mental Health study and conducted in 2021 among 10,000+ respondents, indicates that 29.31 per cent of Indians were suffering from depression in 2021. The study also showed that people have been going through emotional breakdowns, feeling nervousness, tension, stress, anxiety, hopelessness and loneliness, and experiencing troubled sleep. Of the respondents, 39 per cent felt down, depressed or hopeless. A report on mental health in the medical journal The Lancet indicated that 197.3 million people in India, 14.3 per cent of the population, suffered from some form of mental illness in 2017.

Vishal Gondal, Founder and CEO, GOQii Smart Healthcare

“Scaling up is an issue in this space. The other significant challenge is that mental health issues are hard to identify and easy to dismiss. It’s easy to pay for treatment with something like diabetes, which is conclusive and quantifiable. In the case of mental health there is a societal appetite to be dismissive. Technology is needed to democratise mental health. In the next few years, technology will start to play a more integral and critical role in this space,” says Vishal Gondal, Founder and CEO, GOQii Smart Healthcare. GOQii Play, the interactive online video coaching platform within the GOQii App, has emotional wellness coaches who conduct classes on various emotional and mental wellness topics; users can interact with these coaches and get their queries answered.

Along similar lines, Kersi Chavda, consultant psychiatrist at PD Hinduja Hospital and MRC, points out how technology can improve access. “Un-served segments that technology can help are people who are located far from mental health professionals, those who do not want others to know they are seeking help, and those who cannot travel to a hospital or clinic due to physical or financial issues. It’s also useful in monitoring a patient and confirming that they are following the instructions that have been issued. The apps should be extremely user friendly and easy to access. Additionally, it should also include various languages for better communication. It should be standardised to the population and must ensure patient privacy.”

“In some situations, following SOPs that have been laid down would also help in understanding severe cases as there would be organised information about the patient. AI could intervene here, picking up nuances in the sleep or movements of a patient with dementia, Alzheimer’s, or any other neurological condition, to keep track before a mental health professional (MHP) steps in,” he adds.

According to CMHLP’s Lalwani, in this context, technology should be considered an important part of a comprehensive and multi-pronged strategy to address mental health problems as reflected in India’s National Mental Health Policy 2014. One must emphasise that technology cannot be a substitute for addressing social determinants of mental health (such as poverty, unemployment, discrimination, financial debt, lack of access to basic services), investing in community-based mental healthcare, enabling equitable access to quality mental health services, community-based rehabilitation and recovery services, and other resources which reduce the dependence on the formal mental health system, are affordable, and can be scaled, she adds.

Don’t take it easy: Privacy Policy

It finally boils down to how one chooses the right app. According to Dr Joshi, the application should tick a few key check-boxes. “Whether it is providing data safety and privacy, instructions are easy or not to evaluate oneself, easily understandable language and whether they are catering to the specific need of the client or not,” she adds.

It’s important to note here that there is no requirement that all wellness apps conform to the Health Insurance Portability and Accountability Act, known as HIPAA, which governs the privacy of a patient’s health records. Given concerns about data farming, privacy policy compliance, data protection and prevention of security breaches are critical factors in mental health tech, and the government is paying attention. Patients are the prime owners of their health data, and health-care practitioners have access to them, explains Connect2MyDoctor’s Kutty. “As a company, we don’t own any data, and are just custodians of the data. All data is stored locally in India, UAE and Sydney as far as our platform is concerned. We make sure all employees are trained in the HIPAA guidelines to stay updated. As part of being fully HIPAA and General Data Protection Regulation (GDPR) compliant, all patient data is securely stored on our cloud server,” he adds.

Dr Balakrishnan points out that since there are so far no standard guidelines, and since most apps are not overseen by any regulatory body, each individual takes the responsibility of choosing an app for themselves. A few researchers work on reviewing mental health apps, so the apps can be looked up at mindapps.org or One Mind PsyberGuide. “Besides this, individuals need to verify whether these apps are supported or advised by reputed mental health professionals. They should also check privacy and data policies and ensure they have options for crisis intervention,” he adds.

“We have expanded mindapps.org to cover 600 apps. Anyone can search the website today. We do not score or offer recommendations of the best app for anyone. Instead, we let users pick from over 100 filters (such as, cost, privacy, evidence) to learn which apps may be a good fit for what they are looking for. While we don’t have every app on the database, we are always excited to add more new ones as we learn about them,” elaborates Harvard Medical School’s Torous.

However, he adds that ensuring data privacy with apps is hard today. “The best protection is to check that the app offers some safeguards. These are usually in the privacy policy section of the app. We have worked to read and code the privacy policies to make it easier to learn what they do and do not promise.”

He emphasises that users must tread carefully. “It is okay to use these apps today, but to be cautious until we have better regulatory safeguards. With so many apps to pick from, you do not need to settle for one you are worried about or question if it protects your data,” he adds.

According to Woebot’s Evers, the whole service, which helps people identify and work through their innermost thoughts and feelings, is based on trust. “If people don’t trust us, we can’t help them. Long before we were legally required to do so, we were empowering people to delete their data or obtain a copy of it. And while we collect personal information, the user is always in control of that data. We believe standards are important, but they’re not enough. We use standard procedures defined by regulations, including GDPR and HIPAA, to anonymize and transmit user data. But compliance isn’t enough. We’re always exploring and challenging our thinking and constantly working to ensure the privacy and security of data even beyond what is legally required,” he adds.

Policy and safeguards are always going to have a hard time keeping up with technology, and this is true for all segments, health technologies included. The current mental healthcare system is completely insufficient (not enough providers, and few individuals in treatment receive high-quality care), observes Stephen Schueller, Associate Professor, Department of Psychological Science, University of California, Irvine. He is also the Executive Director of OneMindPsyberGuide.org, a non-profit service that reviews mental-health apps. “So, I think mental healthcare is sorely in need of ‘responsible’ innovation.”

He believes an ethics framework that supports responsible innovation is the need of the hour, rather than more policy and regulatory safeguards. Kersi Chavda, consultant psychiatrist at PD Hinduja Hospital and MRC, emphasises that no technology is a panacea, nor is any free of unintended consequences. In the absence of a data governance framework in India, the cross-linking of sensitive personal identifiers with individuals’ mental health profiles could be extremely harmful. “There have been anecdotal reports of mental health digital health platforms monetizing this sensitive data, so, there needs to be strong regulatory mechanisms to ensure that no harm is caused by well-intended technological innovations.”

Comprehensive Legislation

Technology in mental healthcare poses several ethical and privacy concerns, such as protecting users’ privacy rights, how data is processed, what it is processed for, who it is shared with (role of third parties), taking informed consent for collecting or processing data, and taking reasonable security measures to prevent data breaches. Mental health apps often collect and process a large volume of data, including personally identifiable information, and this poses many data security risks. This data, if collected without meaningful informed consent, not handled carefully, or shared with third parties (for commercial or non-commercial purposes), can violate privacy rights, lead to data breaches, be used to profile individuals, or enable unethical profit from the use of personal data for unauthorised purposes. Interestingly, the Joint Parliamentary Committee on the Personal Data Protection Bill, 2019 recognizes that violation of privacy rights can be ‘psychologically detrimental’ for users and lead to depression, anxiety and suicides, says CMHLP’s Kapoor.

“At the same time, data is key in scientific research and developing evidence-based interventions which can help lead to better mental health outcomes. So, this is a complex issue which needs thought and deliberation by multiple stakeholders. Currently, India does not have a comprehensive legislation on data protection. The Information Technology Act, 2000 and its rules such as the Sensitive and Personal Data Information Rules (SPDI), 2011 and Intermediary Guidelines, 2021 provide some guidelines for ensuring data protection.”

For instance, the SPDI rules clearly mention that data related to physical, physiological or mental health conditions, sexual orientation and medical records must be protected according to the provisions of the IT Act and Rules. Additionally, the Health Data Management Policy under the National Digital Health Mission provides guidelines for management of health data and records.

However, the existing law and policy framework is inadequate and ambiguous regarding third parties in the context of mental health. “The Mental Healthcare Act, 2017 recognizes the right to confidentiality of persons with mental illness subject to exceptional situations. It also limits disclosure of personal information to the media without prior consent. The Personal Data Protection Bill 2019 (currently in Parliament) will have implications for regulating the privacy rights of citizens. Several concerns have been raised about how the Bill dilutes privacy rights of citizens. It remains to be seen what implications a comprehensive personal data protection legislation is likely to have for regulating technology-led mental health in India. The mental health sector needs to look at this more closely,” adds Kapoor.

The advent of mobile applications in the healthcare space has the potential to create a low-cost first line of defence that alleviates the pressure, even if marginally, on a perennially strained healthcare system. Will they ever replace the need for trained medical professionals? Perhaps not. Having said this, government intervention is warranted on the policy front when it comes to issues of security, data privacy and confidentiality.

Nonetheless, such applications can surely be utilised effectively as a means of preliminary diagnosis; as a way to track the efficacy and progress of a treatment plan; and in conjunction with existing clinical management of the disease or condition. There is also no denying that the growing number of such tools entering the B2C market, produced by subject-matter experts, is a step in the right direction.